Silos and war-zones

Many organisations, large or small, make the mistake of treating the delivery of a system capable of producing a parameterised report, pivot table or pretty dashboard as a success. For many, the mere delivery of these systems has been a long and bitter struggle. To examine these systems critically, you have to ask: what value do they deliver?

In many cases they will deliver a piece of information to a group of users: "What's the backlog in my call centre?", "How many visitors did my website have?" While these are all of some value to the people asking, on their own they deliver little or no business value in almost all circumstances. To deliver business value, a system has to inform the business in a way that allows it to change its behaviour to save money, increase revenue or avoid regulatory censure. These actions add most value when they align with the unique selling point (USP) or competitive advantage that the organisation is strategically targeting.

If an organisation has built its USP on the quality of its customer service, the call centre waiting time is critical. But just reporting what that waiting time is adds no value; it is the action then taken that adds the value. A remedial action can be taken (bring in more call centre staff), or an attempt can be made to find the root cause and fix that: "do we have a services problem?" The remedial actions are the levers that management has to control business processes, but to use them effectively, and not just react to an issue and treat the symptoms, it's important to give the responsible management the tools to discover the root cause.
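As a rough illustration of the difference between reporting the waiting time and looking for its cause, the sketch below blends a call log with a second feed of service incidents. Everything in it is an assumption made for the example: the field names, the `average_wait_by_incident` helper and the figures are all invented, and a real investigation would involve far more data and far more candidate causes.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical call-centre feed; all values are invented for illustration.
calls = [
    {"day": "Mon", "region": "North", "wait_secs": 40},
    {"day": "Mon", "region": "South", "wait_secs": 50},
    {"day": "Tue", "region": "North", "wait_secs": 200},
    {"day": "Tue", "region": "North", "wait_secs": 220},
    {"day": "Tue", "region": "South", "wait_secs": 60},
]

# Hypothetical second feed: known service incidents by day and region.
incidents = [
    {"day": "Tue", "region": "North", "service": "broadband"},
]


def average_wait_by_incident(calls, incidents):
    """Split calls by whether a service incident was open for the same
    day and region, and return the average wait for each group."""
    incident_keys = {(i["day"], i["region"]) for i in incidents}
    waits = defaultdict(list)
    for call in calls:
        during_incident = (call["day"], call["region"]) in incident_keys
        waits[during_incident].append(call["wait_secs"])
    return {flag: mean(values) for flag, values in waits.items()}


result = average_wait_by_incident(calls, incidents)
print("Average wait during an incident:", result[True])
print("Average wait otherwise:", result[False])
```

The overall average wait alone would only say that Tuesday was bad. Blending in the incident feed is what points towards "do we have a services problem?" as a question worth asking.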

While a BI solution can be of some use here, there is a major flaw. In almost all circumstances you need to know ahead of time what the nature of the cause might be, so that a system can be built to process the data into a metric that can then be used in decision making. For closed problems, where the number of possible causes is fixed or limited, this can work. However, for open or unlimited problems this can be futile, and in fact the mere effort of analysing the problem can cause analysis paralysis, resulting in the system never being built in the first place.

Solutions to some of these issues started to appear in the mid-2000s and became mainstream about four years ago, in the form of Data Discovery platforms. These are not the right solution for all information problems. For example, if I were creating a solution to monetise data or building a recommendation engine, I'd be developing code. If I wanted to forecast the weather, I'd be developing code. But if I wanted to know why I am seeing something, and I had a good range of data feeds that encompass the problem, I'd be reaching for a Data Discovery tool.

Why Data Discovery?

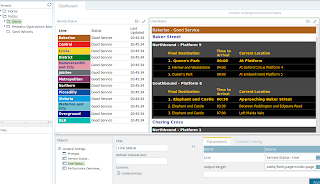

The value of a Data Discovery tool is that it allows you to take a wide range of data sources, some raw, some pre-processed, combine them freely, and perform transformations and calculations to produce an analysis. Sometimes this is a purely numerical or visual analysis; sometimes it is enhanced with statistical modelling for groupings and classifications. These are tools that allow users to explore and "see" their data. The biggest change to this category of tools has been enabled by the arrival of Big Data, but very few tools genuinely exploit the potential of that capability.

Many of the first-generation Data Discovery tools only implement connectivity to Big Data solutions, typically via a SQL interface, potentially with some sort of accelerator such as a pre-aggregation engine or cache. While this allows them to read data out of a Big Data source, it's not really enabling Data Discovery in Big Data. Another solution is needed to prepare the data, make it available and set the security model, and that is nearly always an IT function.

So while these solutions connect to Big Data, they themselves are no more capable of scaling than they were: they are still limited to the data that you can fit in a single machine. In effect, to use them you have to make Big Data small, and crucially this must happen before the data comes into the Data Discovery tool. So while these tools make some grand claims for enabling Big Data Discovery, the reality is rather more limited. It may look, feel and smell like your favourite Data Discovery tool, but its functionality has been reduced to that of a BI reporting tool.
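To make the cost of "making Big Data small" concrete, here is a minimal sketch of what a pre-aggregated extract does to the questions you can ask afterwards. The `aggregate` helper, the field names and the numbers are all hypothetical, invented purely for this example.

```python
from collections import defaultdict

# Hypothetical detailed events as they might sit in the Big Data platform.
raw_events = [
    {"day": "Mon", "region": "North", "calls": 10},
    {"day": "Mon", "region": "South", "calls": 20},
    {"day": "Tue", "region": "North", "calls": 60},
    {"day": "Tue", "region": "South", "calls": 10},
]


def aggregate(rows, keys, measure):
    """Sum `measure` over `rows`, grouped by the given key columns."""
    totals = defaultdict(int)
    for row in rows:
        totals[tuple(row[k] for k in keys)] += row[measure]
    return dict(totals)


# The extract someone decided on ahead of time: calls per day only.
extract = aggregate(raw_events, ["day"], "calls")
print(extract)

# A question nobody anticipated: which region drove Tuesday's spike?
# It can be answered from the raw events...
by_region = aggregate(raw_events, ["day", "region"], "calls")
print(by_region)

# ...but not from the extract, because the region column no longer exists there.
extract_has_region = any(len(key) > 1 for key in extract)
print("Extract can answer by region:", extract_has_region)
```

Whoever built the extract had to guess the questions in advance, which is exactly the limitation of a BI reporting tool described above.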

To genuinely claim to deliver the nirvana of Data Discovery in Big Data, a solution has to not just connect to but actually utilise the storage and processing capabilities of the Big Data platform, so that the blending and calculations take place in the platform at scale. In Hadoop terms this means using the storage and the native scalable processing APIs such as MapReduce and Spark, and enabling business users not familiar with coding to use them.
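The idea of doing the calculation where the data lives can be sketched in a few lines of plain Python. This is only a toy imitation of the map-and-reduce pattern, not Hadoop or Spark code: the partitions are hypothetical in-memory lists standing in for blocks of data on different nodes, and the function names are made up for the example.

```python
from collections import Counter
from functools import reduce

# Hypothetical partitions, standing in for blocks of data held on different nodes.
partitions = [
    [("North", 10), ("South", 5)],
    [("North", 7), ("East", 3)],
    [("South", 2), ("East", 8)],
]


def map_partition(rows):
    """Runs next to the data: reduce one partition to a small partial total."""
    partial = Counter()
    for region, calls in rows:
        partial[region] += calls
    return partial


def merge(left, right):
    """Combine two partial totals; only these small summaries need to move."""
    return left + right


# On a real cluster each partition would be processed in parallel on its own node.
partials = [map_partition(p) for p in partitions]
totals = reduce(merge, partials, Counter())
print(dict(totals))
```

The detailed rows never leave their partition; only the small partial totals are brought together. That is what lets this style of processing scale beyond a single machine.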

Options?

So at this point we've reached the conclusion that adding real business value needs Data Discovery, and delivering business value at scale requires Big Data Discovery. So what are the options? The core capabilities of Data Discovery are the ability to combine and transform data in any way required and to rapidly visualise this data. If you take those as the requirements, there is really only one option that delivers the scale and agility to deliver business value. Platfora is the leading platform for Data Discovery on Big Data, and it's quite clear why. It's not trying to take a legacy product, perhaps with a compromised ROLAP engine, and patch it up for Big Data; it is a genuine from-the-ground-up product built for Big Data. Crucially, it's not dependent on the IT department for preparing data in Hadoop, but places this capability in the hands of the end users. It utilises the entire Hadoop platform for Data Discovery, along with the massive scale this implies.

Conclusion

There is a quiet revolution happening in the world of information solutions. By implementing Data Discovery solutions in Hadoop, such as Platfora, many organisations are now leapfrogging their competitors. By focusing on the end goal of delivering business value, and making the technology fit the high-level business processes rather than delivering intermediate solutions that themselves feed into numerous other processes, Platfora customers are increasing their agility and their advantage over competitors. When IT delivers platforms that enable business users to genuinely self-serve without IT intervention, the business users keep coming back for more and you develop a mature, highly functional organisation.

As for everyone else? Many are now beginning to see the light, but some are determined to keep banging the rocks together, ably assisted by the snake oil sellers taking legacy products and adding endless fixes and workarounds to make something appear to be Big Data. Time will tell which is the right way to go, but I'm pretty confident which way it will go.